Integrated Framework for Transformational Learning in the Era of Human-AI Interaction

Classical instructional design (ADDIE, SAM, Bloom's Taxonomy, Gagné's Nine Events) primarily addresses two dimensions:

This worked in the paradigm of "learning = information transfer from expert to novice." But this paradigm faces systemic limitations:

| Symptom | Root cause |
| --- | --- |
| Trained people don't apply what they learned | Emotional layer not addressed |
| Regression to old patterns | Somatic layer not addressed |
| Shame → hiding → dropout | Social layer not addressed |
| Can't continue learning | Internal support not transferred |
| Doesn't scale | Ecosystem not designed |
| No daily integration | Practice loops not designed |

The emergence of generative AI creates a fundamentally new situation:

Not a teacher replacement, but a new type of agent capable of continuing support after formal training

Without trust, people either don't use AI or use it ineffectively (double-checking every response, not delegating)

Ability to "transfer" knowledge to an AI agent through structured artifacts (guides, prompts, knowledge bases)

Learning to work with AI requires changing identity, habits, and ways of thinking, not just acquiring new knowledge

Context statistic: By various estimates, 70-95% of enterprise AI pilots fail to achieve their deployment goals. The main reason is not technology but people: fear, distrust, and unwillingness to change how they work.

The framework integrates three major streams of thought

Contribution: mechanism of transfer from external to internal support

Contribution: multidimensional architecture, wholeness of personality

Contribution: goal of developing autonomy, meta-observation

Externally mediated action gradually becomes an internal mental act. The goal is to form an internal capacity to orient, not dependence on an external expert.

A person is not just a cognitive machine. Learning affects thinking, emotions, body, and social connections. Ignoring any layer creates systemic failures.

Relationships (to self, others, AI) are not a "soft skill" but infrastructure with concrete parameters. Trust can be designed, measured, and repaired.

Knowledge that remains only in a person's head is vulnerable and doesn't scale. Learning must design its transfer into artifacts, teams, and AI agents.

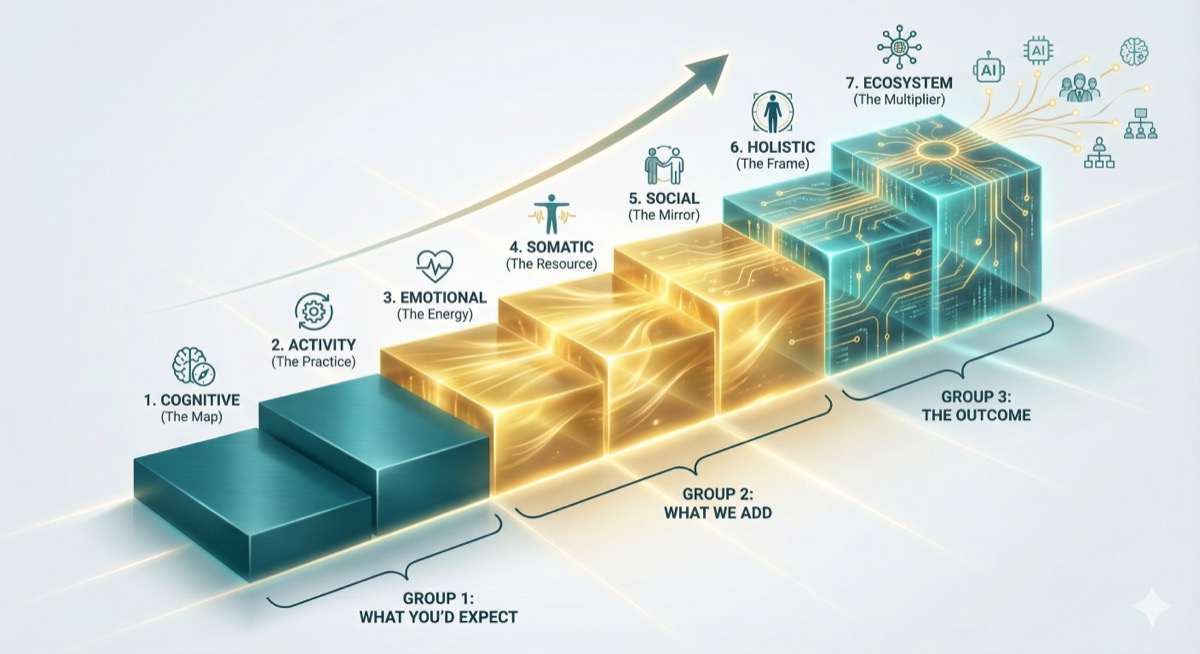

The framework addresses all dimensions of human development

| Layer | Function | Solutions |
| --- | --- | --- |
| 1. Cognitive | Clear mental model | 1-3 principles, decision trees, boundaries of applicability |
| 2. Activity | Turning understanding into action | Simulations, try→feedback→adjust cycles, success criteria |
| 3. Emotional | Motivation, frustration tolerance | Personal meaning, normalizing difficulty, "safe struggle" |
| 4. Somatic | Energy and attention management | Sustainable pace, designed recovery, embodied practice |
| 5. Social | Support through connection | Peer feedback, "others struggle too," communities of practice |
| 6. Holistic | Forming the "inner teacher" | Clear intention, explicit boundaries (no shame/burnout), exit quality > completion |
| 7. Ecosystem | Scaling into ecosystem | Tacit→explicit formalization, AI agent artifacts, team transfer |

The seventh layer represents fundamental novelty

"Textbooks" that AI loads and uses to continue helping the person after formal training:

How human and AI work together:

How knowledge propagates beyond the individual:

The person exits with:

Trust is designable infrastructure with concrete parameters that can be measured and repaired

You ≠ your mistake. It's OK not to know.

Truth can be spoken without retaliation.

Delivered or renegotiated in advance.

Clear who decides what.

We discuss, we don't war.

"We can't" + honest plan.

Acknowledge → fix → change.

Mistakes allowed, hiding isn't.

Early warning signals visible.

Low trust = expensive (control, approvals, insurance)

High trust = cheaper coordination, faster decisions, more resilient in crisis

Each upper layer depends on the lower ones. You can't build the "inner teacher" (6) if the person is burned out (4) or ashamed to ask questions (5).
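The dependency rule above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the layer order follows the numbering used in this document, while the function name `first_blocking_layer` and the idea of a single boolean "healthy" flag per layer are hypothetical simplifications, not part of the framework itself.

```python
from typing import Mapping, Optional

# Layer order follows the document's numbering (1 = lowest, 7 = highest).
LAYERS = [
    "Cognitive", "Activity", "Emotional", "Somatic",
    "Social", "Holistic", "Ecosystem",
]


def first_blocking_layer(target: str, healthy: Mapping[str, bool]) -> Optional[str]:
    """Return the lowest layer below `target` that is not healthy, or None.

    Assumption: each layer is reduced to one boolean flag; a layer missing
    from `healthy` is treated as not healthy.
    """
    target_index = LAYERS.index(target)
    for layer in LAYERS[:target_index]:
        if not healthy.get(layer, False):
            return layer
    return None


# A participant who is burned out (Somatic) cannot yet build the
# "inner teacher" (Holistic), even if every other lower layer is fine.
status = {
    "Cognitive": True, "Activity": True, "Emotional": True,
    "Somatic": False, "Social": True,
}
print(first_blocking_layer("Holistic", status))  # Somatic
```

In practice each layer would be assessed with richer signals than a single flag; the sketch only shows that work on a higher layer should start from the lowest unresolved layer beneath it.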

Trust Infrastructure permeates all layers:

| Layer | Form trust takes |
| --- | --- |
| Cognitive | Trust in the model, in the knowledge source |
| Activity | Safety of error in practice |
| Emotional | Right to frustration without shame |
| Somatic | Right to pause, to "not now" |
| Social | Peer trust, group psychological safety |
| Holistic | Trust in self, internal support |
| Ecosystem | Trust in AI agent, in the system |

Principle: Trust in AI cannot be "installed" by lecture. It's grown through understanding AI's boundaries, practice with feedback, emotional readiness for AI errors, and accumulating successful experience.

What should NOT be reinforced

| Avoid | Instead |
| --- | --- |
| "Everyone got it, and you..." | "This is hard for everyone, let's work through it" |
| "Just try harder" | "Rest, we'll continue fresh tomorrow" |
| "Main thing is to finish the course" | "Goal is ability to keep learning" |
| Moral devaluation for error | Causes can be discussed; destroying dignity cannot |
| "AI will do everything for you" | Honest boundaries + development plan |

Red Line Principle:

Legitimate: "No resources — we choose from options"

Illegitimate: "Do as we want, or else..."

Learning must not use ultimatums, shame, or blackmail, even for "noble purposes."

| Layer | Indicator |
| --- | --- |
| Emotional | Participants openly acknowledge difficulties |
| Somatic | No burnout after intensives |
| Social | Peer discussions active, questions asked |
| Holistic | Continue learning on their own after training |
| Ecosystem | Knowledge appears in prompts/guides/agent configs |
| Trust | Scope of AI application grows, doesn't shrink |
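The trust indicator (scope of AI application grows, doesn't shrink) could be tracked with something as simple as the sketch below. It assumes, purely for illustration, that a team counts the distinct kinds of tasks it delegates to AI in each review period; the function name `scope_trend` and the first-versus-last comparison are hypothetical choices, not a measurement method prescribed by the framework.

```python
from typing import Sequence


def scope_trend(tasks_per_period: Sequence[int]) -> str:
    """Classify the trend by comparing the first and last period.

    Assumption: each entry is the number of distinct task types
    delegated to AI during one review period.
    """
    if len(tasks_per_period) < 2:
        return "insufficient data"
    change = tasks_per_period[-1] - tasks_per_period[0]
    if change > 0:
        return "growing"
    if change < 0:
        return "shrinking"
    return "flat"


print(scope_trend([3, 4, 4, 6]))  # growing
print(scope_trend([5, 4, 2]))     # shrinking
```

A shrinking result would be a prompt to look at the trust layers for the cause, not a verdict on the participants.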

Understanding, practice quality, energy, resource

Has behavior changed, is skill retained

Has trust in self and AI grown, is there autonomy, no negative residue

Training employees to work with AI

Where identity transformation is needed

Where there are no "right answers"

Where cognitive alone is insufficient

Where long-term support is needed

The proposed framework views learning not as information transfer but as designing conditions for human transformation in the context of a person's relationships: with self, with other people, with AI, and with the organization.

The framework integrates seven transformation layers (from cognitive map to ecosystem propagation) with nine trust infrastructure layers (from basic safety to observability).

Special focus is on the Ecosystem Layer: designing how learning continues after the formal course through the partnership of the person with an AI agent equipped with specially prepared knowledge artifacts.

The goal is not a certificate, but the "inner teacher": the person's ability to continue learning, adapting, and developing in partnership with AI and community.